Introduction

A computer network is a collection of interconnected computing devices. The purpose of a computer network is to share data among these devices.

In a computer network, put simply, we have a sender and a receiver. The sender and receiver can be thought of as machines sharing the data amongst themselves.

For a sender to send data to the receiver, a connection has to be established. The connection can be wired or wireless. Through this connection, the data moves from the sender machine to the receiver machine.

One thing to note is that the message sent by the sender must be read and understood at the receiver’s end. Otherwise, the whole activity becomes futile.

For that, the same protocols must be running on the sender's machine as well as on the receiver’s machine. Protocols, in simple terms, are a set of rules that both sides agree to follow.

Protocols make proper communication possible. For example, if you speak only English and you call a person who knows only Russian, then despite establishing a connection over the phone, there’s no communication. Protocols make communication happen.
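To make the idea of an agreed set of rules concrete, here is a minimal sketch in Python. The rule used here (a 4-byte length followed by UTF-8 text) and the function names `encode_message` and `decode_message` are made up for illustration; they are not a real network protocol.

```python
import struct

def encode_message(text: str) -> bytes:
    """Sender side: follow the agreed rule (4-byte length, then UTF-8 text)."""
    payload = text.encode("utf-8")
    return struct.pack("!I", len(payload)) + payload

def decode_message(frame: bytes) -> str:
    """Receiver side: apply the same rule in reverse."""
    (length,) = struct.unpack("!I", frame[:4])
    payload = frame[4:4 + length]
    if len(payload) != length:
        raise ValueError("frame is shorter than its declared length")
    return payload.decode("utf-8")

frame = encode_message("Привет")
# A receiver that follows the same rule recovers the message.
print(decode_message(frame))
# A receiver that assumes a different text encoding reads garbage instead.
print(frame[4:].decode("utf-16-le", errors="replace"))
```

The bytes on the wire are identical in both cases. Only the receiver that follows the sender's rules gets the original message back, which is the phone call example in code form.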

But the question that should arise in your mind is: where are the sender and the receiver located?

One scenario is that the client and the server, or the sender and the receiver, reside on the same machine, let’s say your laptop. Suppose you type something on the keyboard and it shows on your monitor. In this case, the keyboard becomes the sender and the monitor becomes the receiver. But since the act of typing and its display on the screen are part of inter-process communication, do you feel this kind of communication requires a computer network?

NO!

The operating system takes care of such inter-process communication, not the computer network. A computer network comes into the picture only when the client and the server, the sender and the receiver in our case, are on separate machines.

Our goal with computer networks is simple. Whatever we type on the keyboard shows up promptly on the screen, and we want the same level of smoothness when the sender machine and the receiver machine are connected over a network.

This can be illustrated with the real-world example of Facebook. Suppose you’re in India trying to open Mark Zuckerberg’s brainchild on your smartphone, and the Facebook server is in the US. The data is retrieved so quickly and accurately that you’re no longer astonished by it, since you’re used to it. It seems like you’re accessing the data locally and not via a server located thousands of miles away from you. Our goal with computer networks is to create such environments, where the duality of server and client is erased and both seem to be operating under the same roof. But actually, they’re not.

The basic functionality of a computer network is that the server and client machines should seem like one despite being physically far from each other.

Functionalities

The functionalities of a computer network fall into two categories:

Mandatory

Optional

Mandatory:

Error control: Is the message the receiver got the same as the message we sent? The error control functionality should be robust enough that you become aware of any error that occurs while data is being shared from the sender machine to the receiver.
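One common way to detect such errors is a checksum. The sketch below uses CRC-32 from Python's standard `zlib` module. The helper names `add_checksum` and `verify` are hypothetical, and real protocols pick their own checksum and frame layout, so treat this as an illustration of the idea only.

```python
import zlib

def add_checksum(data: bytes) -> bytes:
    """Sender side: append a 4-byte CRC-32 of the data."""
    return data + zlib.crc32(data).to_bytes(4, "big")

def verify(frame: bytes) -> bool:
    """Receiver side: recompute the CRC-32 and compare it with the one received."""
    data, received = frame[:-4], frame[-4:]
    return zlib.crc32(data).to_bytes(4, "big") == received

frame = add_checksum(b"hello receiver")

# Simulate a transmission error by flipping one bit in the first byte.
corrupted = bytes([frame[0] ^ 0x01]) + frame[1:]

print(verify(frame))      # True: the message arrived intact
print(verify(corrupted))  # False: the receiver notices the error
```

Detecting the error is only the first half. A real protocol also has to decide what to do next, for example ask the sender to retransmit.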

Flow control: There should be some kind of constraint on the amount of data being sent at a time, so that the receiver is not overwhelmed and there’s no congestion.
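One way to apply such a constraint is a fixed-size window: the sender may only have a limited number of unacknowledged chunks in flight. The simulation below is a simplified sketch of that idea, assuming the receiver acknowledges one chunk per step. The function name `send_with_window` and the window size of 3 are chosen only for illustration.

```python
from collections import deque

def send_with_window(chunks, window_size):
    """Simulate a sender limited to `window_size` unacknowledged chunks."""
    pending = deque(chunks)   # chunks not yet sent
    in_flight = deque()       # chunks sent but not yet acknowledged
    delivered = []
    max_in_flight = 0

    while pending or in_flight:
        # The sender fills the window but never exceeds it.
        while pending and len(in_flight) < window_size:
            in_flight.append(pending.popleft())
        max_in_flight = max(max_in_flight, len(in_flight))

        # The receiver processes one chunk and acknowledges it,
        # which frees one slot in the window.
        delivered.append(in_flight.popleft())

    return delivered, max_in_flight

chunks = [f"chunk-{i}" for i in range(10)]
delivered, max_in_flight = send_with_window(chunks, window_size=3)

print(delivered == chunks)  # True: everything arrives, in order
print(max_in_flight)        # 3: the sender never exceeded the window
```

A larger window lets the sender push more data before waiting, while a smaller one protects a slow receiver. Real protocols adjust this limit dynamically.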

The protocols running on your systems provide these mandatory functionalities.

Optional:

Encryption/decryption: Here we add a layer of security to our data sharing to protect it from intruders. But not every process requires this level of security. Applications such as banking need encryption and decryption, but adding them increases the system's complexity. The code for the mandatory functionalities is already present in the kernel of our OS in the form of algorithms; if we also include the encryption and decryption algorithms in the kernel, it’s natural for the system complexity to rise. As a result, the time to share the data also increases.

Checkpoint: Checkpoints are like milestones recorded during a long transfer, for example a file download. If you’re downloading a 500MB file and the download fails at 300MB, ideally the download should resume from 300MB or near that value rather than from zero. This is where checkpoints come in handy.
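The resume behaviour can be sketched with a small in-memory simulation. Here the number of bytes already saved acts as the checkpoint. The function `download`, the `fail_at` parameter, and the 512-byte "file" are all hypothetical stand-ins, scaled down from the 500MB example.

```python
from typing import Optional

def download(source: bytes, dest: bytearray, chunk_size: int,
             fail_at: Optional[int] = None) -> int:
    """Copy `source` into `dest`, starting from the checkpoint len(dest).

    Returns the number of bytes transferred during this call.
    `fail_at` simulates a connection drop at that byte offset.
    """
    start = len(dest)   # the checkpoint: everything before it is already saved
    offset = start
    while offset < len(source):
        if fail_at is not None and offset >= fail_at:
            raise ConnectionError(f"connection lost at byte {offset}")
        chunk = source[offset:offset + chunk_size]
        dest.extend(chunk)
        offset += len(chunk)
    return offset - start

source = bytes(range(256)) * 2   # 512 bytes standing in for the file
dest = bytearray()

try:
    download(source, dest, chunk_size=100, fail_at=300)
except ConnectionError as err:
    print(err)                   # connection lost at byte 300

resumed = download(source, dest, chunk_size=100)
print(resumed)                   # 212: only the missing part was fetched
print(bytes(dest) == source)     # True: the file is complete
```

Without the checkpoint, the second attempt would have transferred all 512 bytes again.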

There are more than 70 functionalities across the mandatory and optional categories combined, and discussing each one is beyond the scope of this article.

Why do we need the OSI model?

The ISO (International Organization for Standardization) created a standard model called the OSI model. It’s a theoretical model that organizes all these 70-odd functionalities, so that whenever a message is sent, it follows the protocols defined in the OSI model. Only after the message has passed through these protocols or functionalities does it leave the system and go to the receiver.

Similarly, on the receiver’s machine, this message will follow the same protocols before reaching the user.

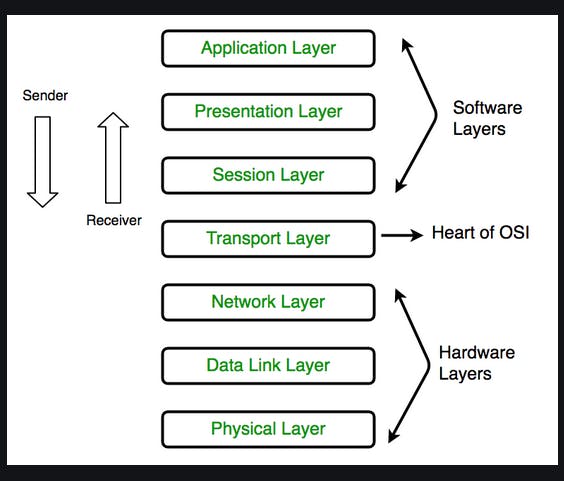

OSI Model (Open Systems Interconnection):

Seven layers make up the OSI model. The 70-odd functionalities, mandatory or optional, are distributed amongst these seven layers.

So, whenever a message is sent from a machine, it traverses all seven layers before going to the receiver’s machine.
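This traversal can be pictured with a toy sketch. The seven layer names below are the standard OSI layers, listed from top to bottom. Everything else is a simplification made for illustration: each layer here just adds a text tag, whereas real layers add binary headers (and sometimes trailers), and the physical layer transmits bits rather than adding a header. The function names `send` and `receive` are hypothetical.

```python
# The seven OSI layers, from the one closest to the user down to the wire.
LAYERS = [
    "Application",
    "Presentation",
    "Session",
    "Transport",
    "Network",
    "Data Link",
    "Physical",
]

def send(message: str) -> str:
    """Sender side: the message passes down through every layer in turn."""
    data = message
    for layer in LAYERS:                 # top to bottom
        data = f"[{layer}]{data}"        # each layer wraps what it was given
    return data

def receive(data: str) -> str:
    """Receiver side: the layers are traversed in the opposite order."""
    for layer in reversed(LAYERS):       # bottom to top
        tag = f"[{layer}]"
        if not data.startswith(tag):
            raise ValueError(f"expected data wrapped by the {layer} layer")
        data = data[len(tag):]           # each layer removes its own wrapper
    return data

on_the_wire = send("hello")
print(on_the_wire)
print(receive(on_the_wire))              # hello
```

The outermost tag on the wire belongs to the lowest layer, so the receiver has to unwrap from the bottom layer up to recover the original message.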